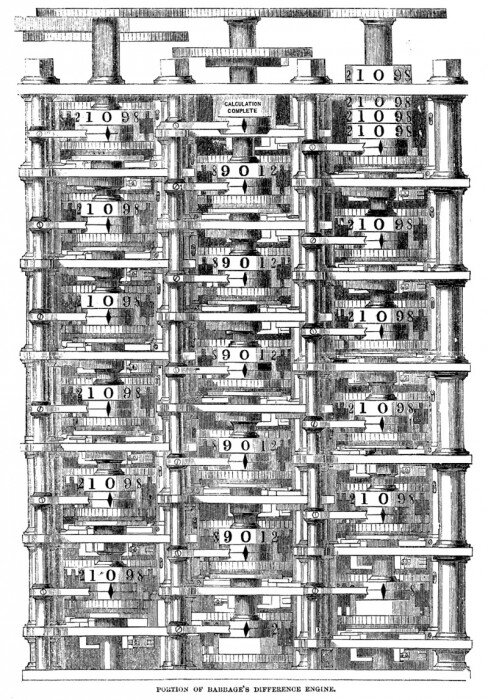

Computers are used to make decisions in an increasing number of domains. There is widespread agreement that some of these uses are ethically problematic. Far less clear is where ethical problems arise, and what might be done about them. This paper expands and defends the Ethical Gravity Thesis: ethical problems that arise at higher levels of analysis of an automated decision-making system are inherited by lower levels of analysis. Particular instantiations of systems can add new problems, but not ameliorate more general ones. We defend this thesis by adapting Marr’s famous 1982 framework for understanding information-processing systems. We show how this framework allows one to situate ethical problems at the appropriate level of abstraction, which in turn can be used to target appropriate interventions.

Join us at the 4th AAAI/ACM Conference on AI, Ethics, and Society, 19–21 May 2021!

Some realistic models of neural spiking take into account spike timing, yet the practical relevance of spike timing is often unclear. I show that polychronous networks reflect a distinct organisational principle from notions of pluripotency, redundancy, or re-use, and argue that properly understanding this phenomenon requires a shift to a time-sensitive, process-based view of computation.

Colin Klein (ANU), Andrew Barron (Macquarie) and Marta Halina (Cambridge) have been awarded a grant from the Templeton World Charity Foundation to study "The major transitions in the evolution of cognition". This $1M USD grant will fund research into the major shifts in computational organisation that allowed evolving brains to process information in new ways. Researchers at the ANU, led by Chief Investigator Klein, will explore the philosophical foundations of computational neuroscience.

This study represents the first systematic, pre-registered attempt to establish whether and to what extent the YouTube recommender system tends to promote radical content. Our results are consistent with the radicalization hypothesis. We discuss our findings, as well as directions for future research and recommendations for users, industry, and policy-makers.

Mind Design III will update Haugeland's classic reader on the philosophy of artificial intelligence for the modern era. It will contain a mix of classic and contemporary readings, along with a new introduction to contextualise the topic for students. Expected publication date: Q1 2021.

In a paper published in PLOS ONE, Colin Klein and co-authors shed light on the online world of conspiracy theorists by studying a large set of user comments. Their key finding was that people who eventually engage with conspiracy forums differ from those who don't in both where and what they post. The patterns of difference suggest that these users actively seek out sympathetic communities, rather than passively stumbling into problematic beliefs.

Seth Lazar and Colin Klein question the value of basing design decisions for autonomous vehicles on massive online gamified surveys. Sometimes the size of big data can't make up for what it omits.

Colin Klein was interviewed by ABC Drive and 2CC Canberra about conspiracy theories surrounding COVID-19 and the role of online information platforms such as Twitter in propagating misinformation.

As humans, our skills define us. No skill is more human than the exercise of moral judgment. We are already using Artificial Intelligence (AI) to automate morally-loaded decisions. In other domains of human activity, automating a task diminishes our skill at that task. Will 'moral automation' diminish our moral skill? If so, how can we mitigate that risk, and adapt AI to enable moral 'upskilling'? Our project, funded by the Templeton World Charity Foundation, will use philosophy, social psychology, and computer science to answer these questions.

Colin Klein and Mark Alfano began work in July 2019 on a $300,000 ARC grant to investigate 'Trust in a social and digital world'. Using the tools of social epistemology, virtue epistemology, and network science, this project will identify how individuals should distribute their trust when embedded in epistemically hostile environments.
